Probability

Classical definition of Probability

- Probability measures the likelihood of an event happening.

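The classical definition of probability, also known as the Laplace definition, states that if an experiment has a finite number of equally likely outcomes, the probability of an event A occurring is given by:

$$P(A) = \frac{\text{number of outcomes favorable to } A}{\text{total number of possible outcomes}}$$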
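A minimal Python sketch of the classical definition, assuming the sample space and the event are stored as sets of equally likely outcomes. The function name `classical_probability` and the die example are illustrative, not part of the original notes; `Fraction` keeps the ratio exact.

```python
from fractions import Fraction

def classical_probability(event, sample_space):
    """P(A) = |A| / |S| for a finite sample space of equally likely outcomes."""
    favorable = event & sample_space  # outcomes of S that belong to A
    return Fraction(len(favorable), len(sample_space))

# Hypothetical example: one roll of a fair six-sided die.
die = {1, 2, 3, 4, 5, 6}
even = {2, 4, 6}
print(classical_probability(even, die))  # 1/2
```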

Combined events

Independence Relation

If events A and B are independent, then

$$P(A \cap B) = P(A)\,P(B)$$

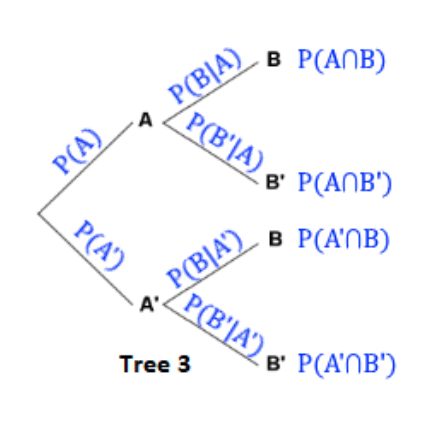
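A small sketch that tests the product rule for independence by enumerating the 36 equally likely outcomes of two fair dice. The dice setup and the helper names `prob` and `independent` are assumptions made for illustration.

```python
from fractions import Fraction
from itertools import product

# Sample space for two fair dice: 36 equally likely ordered pairs.
omega = list(product(range(1, 7), repeat=2))

def prob(event):
    """Classical probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for w in omega if event(w)), len(omega))

def independent(a, b):
    """True if P(A and B) == P(A) * P(B)."""
    return prob(lambda w: a(w) and b(w)) == prob(a) * prob(b)

first_is_six = lambda w: w[0] == 6
second_is_even = lambda w: w[1] % 2 == 0
sum_is_twelve = lambda w: w[0] + w[1] == 12

print(independent(first_is_six, second_is_even))  # True
print(independent(first_is_six, sum_is_twelve))   # False
```

The second pair fails because knowing the sum is 12 forces the first die to be 6, so the events carry information about each other.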

Conditional Probability

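$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}, \quad P(B) > 0$$

Thus

$$P(A \cap B) = P(A \mid B)\,P(B)$$

Or

$$P(A \cap B) = P(B \mid A)\,P(A)$$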
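A sketch of conditional probability on the same two-dice sample space (an illustrative assumption), which also checks that both forms of the multiplication rule give the same joint probability. The helper name `conditional` is hypothetical.

```python
from fractions import Fraction
from itertools import product

omega = list(product(range(1, 7), repeat=2))  # two fair dice

def prob(event):
    return Fraction(sum(1 for w in omega if event(w)), len(omega))

def conditional(a, b):
    """P(A | B) = P(A and B) / P(B); requires P(B) > 0."""
    p_b = prob(b)
    if p_b == 0:
        raise ValueError("P(B) must be positive")
    return prob(lambda w: a(w) and b(w)) / p_b

sum_is_eight = lambda w: w[0] + w[1] == 8
first_is_even = lambda w: w[0] % 2 == 0

p = conditional(sum_is_eight, first_is_even)
print(p)  # 1/6

# Multiplication rule: P(A and B) = P(A | B) P(B) = P(B | A) P(A)
joint = prob(lambda w: sum_is_eight(w) and first_is_even(w))
assert joint == p * prob(first_is_even)
assert joint == conditional(first_is_even, sum_is_eight) * prob(sum_is_eight)
```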

Bayes Theorem

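$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

Thus

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B \mid A)\,P(A) + P(B \mid A^c)\,P(A^c)}$$

Since, by the law of total probability,

$$P(B) = P(B \mid A)\,P(A) + P(B \mid A^c)\,P(A^c)$$

where $A^c$ is the complement of A (the event that A does not occur).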
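A minimal sketch of Bayes' theorem with the denominator expanded by the law of total probability. The screening-test numbers below (1% prior, 99% sensitivity, 5% false-positive rate) are hypothetical and chosen only to show how a small prior keeps the posterior low.

```python
from fractions import Fraction

def bayes(p_b_given_a, p_a, p_b_given_not_a):
    """Posterior P(A | B), with P(B) expanded by the law of total probability."""
    p_not_a = 1 - p_a
    p_b = p_b_given_a * p_a + p_b_given_not_a * p_not_a
    return p_b_given_a * p_a / p_b

# Hypothetical numbers: A = "has condition", B = "test is positive".
prior = Fraction(1, 100)           # P(A)
sensitivity = Fraction(99, 100)    # P(B | A)
false_positive = Fraction(5, 100)  # P(B | not A)

posterior = bayes(sensitivity, prior, false_positive)
print(posterior)  # 1/6
```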

Random Variables

Probability Mass Function (PMF)

It denotes the probability that a discrete random variable X takes exactly the value x:

$$p(x) = P(X = x)$$
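As an illustration (the two-dice example is an assumption, not from the notes), this sketch builds the PMF of X = sum of two fair dice and checks that the probabilities add up to 1.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

def pmf_of_sum(sides=6):
    """PMF p(x) = P(X = x) where X is the sum of two fair dice."""
    counts = Counter(a + b for a, b in product(range(1, sides + 1), repeat=2))
    total = sides * sides
    return {x: Fraction(c, total) for x, c in sorted(counts.items())}

pmf = pmf_of_sum()
print(pmf[7])             # 1/6
print(sum(pmf.values()))  # 1
```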

Probability Density Function (PDF)

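For a continuous random variable X, the PDF f(x) satisfies

$$\int_{-\infty}^{\infty} f(x)\,dx = 1$$

where $f(x) \ge 0$ for all x.

To find the probability that X falls in an interval [a, b], integrate the PDF over that interval:

$$P(a \le X \le b) = \int_a^b f(x)\,dx$$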
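A sketch of computing P(a ≤ X ≤ b) by numerically integrating a PDF with the midpoint rule. The exponential distribution with rate 2 is a hypothetical choice, picked because its interval probability has a closed form to compare against.

```python
import math

def exponential_pdf(x, rate=2.0):
    """PDF of an exponential distribution (the rate is a hypothetical choice)."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0

def integrate(f, a, b, n=100_000):
    """Approximate the integral of f over [a, b] with the midpoint rule."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

a, b = 0.5, 1.5
approx = integrate(exponential_pdf, a, b)
exact = math.exp(-2.0 * a) - math.exp(-2.0 * b)  # closed form for this PDF
print(approx, exact)
```

Integrating over a long range such as [0, 20] gives a value very close to 1, matching the normalization condition.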

Expectation

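The expectation E[X] is the long-run average value of X over many repetitions of the experiment. It is the sum (or, for a continuous variable, the integral) of each value x multiplied by the probability of x occurring.

Discrete:

$$E[X] = \sum_x x\,p(x)$$

Continuous:

$$E[X] = \int_{-\infty}^{\infty} x\,f(x)\,dx$$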
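A short sketch of both expectation formulas: an exact sum for a fair die, and a midpoint-rule approximation of the integral for a uniform density on [0, 1]. Both example distributions and the function names are assumptions for illustration.

```python
from fractions import Fraction

def expectation_discrete(pmf):
    """E[X] = sum over x of x * p(x)."""
    return sum(x * p for x, p in pmf.items())

def expectation_continuous(pdf, a, b, n=10_000):
    """E[X] = integral of x * f(x) dx over [a, b], via the midpoint rule."""
    h = (b - a) / n
    return h * sum((a + (i + 0.5) * h) * pdf(a + (i + 0.5) * h) for i in range(n))

die = {x: Fraction(1, 6) for x in range(1, 7)}
print(expectation_discrete(die))  # 7/2

uniform_pdf = lambda x: 1.0  # uniform density on [0, 1]
print(expectation_continuous(uniform_pdf, 0.0, 1.0))  # approximately 0.5
```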

Properties of Expectation

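Constant

$$E[c] = c$$

Scalar Multiplicativity

$$E[aX] = a\,E[X]$$

Linearity of Expectation

$$E[aX + bY] = a\,E[X] + b\,E[Y]$$

This holds whether or not X and Y are independent.

Product of Two Independent Variables

If X and Y are independent, then

$$E[XY] = E[X]\,E[Y]$$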
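A sketch that checks the expectation properties exactly on two independent fair dice X and Y. The dice model and the constants a, b, c are arbitrary assumptions; any values would do.

```python
from fractions import Fraction
from itertools import product

# Joint distribution of two independent fair dice X and Y.
outcomes = list(product(range(1, 7), repeat=2))
p = Fraction(1, len(outcomes))

def E(g):
    """Expectation of g(X, Y) under the uniform joint distribution."""
    return sum(g(x, y) * p for x, y in outcomes)

EX, EY = E(lambda x, y: x), E(lambda x, y: y)
a, b, c = 3, -2, 5  # arbitrary constants for the checks

assert E(lambda x, y: c) == c                            # E[c] = c
assert E(lambda x, y: a * x) == a * EX                   # E[aX] = aE[X]
assert E(lambda x, y: a * x + b * y) == a * EX + b * EY  # linearity
assert E(lambda x, y: x * y) == EX * EY                  # independence
print(EX, EY, E(lambda x, y: x * y))  # 7/2 7/2 49/4
```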

Variance

Formula

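Variance measures the spread of a random variable around its mean $\mu = E[X]$:

$$\mathrm{Var}(X) = E[(X - \mu)^2]$$

We can also rewrite it as

$$\mathrm{Var}(X) = E[X^2] - (E[X])^2$$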

It is equal to the square of the standard deviation $\sigma$:

$$\mathrm{Var}(X) = \sigma^2$$
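A sketch comparing the definition Var(X) = E[(X − μ)²] with the shortcut Var(X) = E[X²] − (E[X])² on a fair die (an illustrative choice). With exact fractions the two agree exactly.

```python
from fractions import Fraction

def variance_definition(pmf):
    """Var(X) = E[(X - mu)^2]."""
    mu = sum(x * p for x, p in pmf.items())
    return sum((x - mu) ** 2 * p for x, p in pmf.items())

def variance_shortcut(pmf):
    """Var(X) = E[X^2] - (E[X])^2."""
    mu = sum(x * p for x, p in pmf.items())
    return sum(x * x * p for x, p in pmf.items()) - mu ** 2

die = {x: Fraction(1, 6) for x in range(1, 7)}
print(variance_definition(die), variance_shortcut(die))  # 35/12 35/12
```

With floating-point data, the shortcut can lose precision through cancellation when E[X²] and (E[X])² are large and close, so the definitional form is often preferred numerically.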

Properties of Variance

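Constant

$$\mathrm{Var}(c) = 0$$

Scalar Multiplicativity

$$\mathrm{Var}(aX) = a^2\,\mathrm{Var}(X)$$

Translation Invariance

$$\mathrm{Var}(X + c) = \mathrm{Var}(X)$$

Additivity

If X and Y are independent, then

$$\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y)$$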
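A sketch that verifies the variance properties on two independent fair dice (an assumed example). The last assertion shows additivity failing for the fully dependent pair (X, X), where Var(2X) = 4 Var(X) rather than 2 Var(X).

```python
from fractions import Fraction
from itertools import product

outcomes = list(product(range(1, 7), repeat=2))  # X, Y: independent fair dice
p = Fraction(1, len(outcomes))

def var(g):
    """Variance of g(X, Y) under the uniform joint distribution."""
    mu = sum(g(x, y) * p for x, y in outcomes)
    return sum((g(x, y) - mu) ** 2 * p for x, y in outcomes)

VX, VY = var(lambda x, y: x), var(lambda x, y: y)
a, c = 3, 5  # arbitrary constants for the checks

assert var(lambda x, y: c) == 0                  # Var(c) = 0
assert var(lambda x, y: a * x) == a ** 2 * VX    # Var(aX) = a^2 Var(X)
assert var(lambda x, y: x + c) == VX             # Var(X + c) = Var(X)
assert var(lambda x, y: x + y) == VX + VY        # independent X, Y
assert var(lambda x, y: x + x) != VX + VX        # dependent pair (X, X)
print(VX, var(lambda x, y: x + y))  # 35/12 35/6
```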

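Cumulative Distribution Function (CDF)

The CDF gives the probability that X takes a value less than or equal to x. It accumulates the PMF (by summation) or the PDF (by integration), so it is non-decreasing and rises from 0 to 1. For a discrete variable it is a step function; for common continuous distributions such as the normal it is an S-shaped curve.

Discrete

$$F(x) = P(X \le x) = \sum_{t \le x} p(t)$$

Continuous

$$F(x) = P(X \le x) = \int_{-\infty}^{x} f(t)\,dt$$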
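A sketch of a discrete CDF built from a PMF by running sums. It is evaluated with a binary search over the sorted support, which shows the step-function shape: F stays flat between support points. The function name `discrete_cdf` and the die example are assumptions.

```python
from bisect import bisect_right
from fractions import Fraction
from itertools import accumulate

def discrete_cdf(pmf):
    """Return F with F(x) = P(X <= x) = sum of p(t) for t <= x."""
    support = sorted(pmf)
    cumulative = list(accumulate(pmf[t] for t in support))

    def F(x):
        i = bisect_right(support, x)  # number of support points t with t <= x
        return cumulative[i - 1] if i > 0 else Fraction(0)

    return F

die = {x: Fraction(1, 6) for x in range(1, 7)}
F = discrete_cdf(die)
print(F(0), F(3), F(3.5), F(6))  # 0 1/2 1/2 1
```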

Look at Distributions

Binomial Distributions

Modern Axiomatic Definition

It is based on the Kolmogorov probability axioms, which extend the definition to outcomes that are not equally likely and to experiments with infinitely many outcomes.

Kolmogorov Probability Axioms

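Let $(\Omega, \mathcal{F}, P)$ be a probability space, where $\Omega$ is the sample space, $\mathcal{F}$ is the collection of events, and $P$ assigns a probability to each event.

- Non-negativity: $P(E) \ge 0$ for every event $E$.
- Normalization: $P(\Omega) = 1$.
- Countable additivity: if $E_1, E_2, \dots$ are disjoint (mutually exclusive), then

$$P\left(\bigcup_{i=1}^{\infty} E_i\right) = \sum_{i=1}^{\infty} P(E_i)$$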
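A sketch that checks the axioms for a finite sample space, where countable additivity reduces to finite additivity and, by induction, checking pairs of disjoint events is enough. Because `P` here is defined by summing per-outcome weights, additivity holds automatically, so non-negativity and normalization do the real work. The weights below, including the loaded die with unequal probabilities, are hypothetical.

```python
from fractions import Fraction
from itertools import chain, combinations

def powerset(s):
    """All subsets of s, i.e. every event of a finite sample space."""
    s = list(s)
    subsets = chain.from_iterable(combinations(s, r) for r in range(len(s) + 1))
    return [frozenset(c) for c in subsets]

def satisfies_axioms(weights):
    """Check the axioms for P(E) = sum of outcome weights in E on a finite sample space."""
    omega = frozenset(weights)
    P = lambda event: sum((weights[w] for w in event), Fraction(0))
    events = powerset(omega)
    non_negative = all(P(e) >= 0 for e in events)
    normalized = P(omega) == 1
    additive = all(P(e1 | e2) == P(e1) + P(e2)
                   for e1 in events for e2 in events if not (e1 & e2))
    return non_negative and normalized and additive

loaded_die = {1: Fraction(1, 2), 2: Fraction(1, 10), 3: Fraction(1, 10),
              4: Fraction(1, 10), 5: Fraction(1, 10), 6: Fraction(1, 10)}
bad_total = {"H": Fraction(3, 4), "T": Fraction(1, 2)}   # sums to 5/4
negative = {"H": Fraction(3, 2), "T": Fraction(-1, 2)}   # has a negative weight
print(satisfies_axioms(loaded_die), satisfies_axioms(bad_total), satisfies_axioms(negative))
# True False False
```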