Linear Algebra

Linear Algebra (Elementary)

Vectors

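Vectors are usually represented as a column of numbers that encodes a direction and a magnitude, e.g. $\mathbf{a} = \begin{pmatrix} a_1 \\ a_2 \\ a_3 \end{pmatrix}$.

The magnitude of a vector is found by summing the squares of its components and taking the square root of the result:

$$|\mathbf{a}| = \sqrt{a_1^2 + a_2^2 + a_3^2}$$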
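A minimal Python sketch of this recipe, assuming a vector is stored as a plain list of numbers (the function name `magnitude` is illustrative, not from any library):

```python
import math


def magnitude(v):
    """Square each component, add the squares, then take the square root."""
    return math.sqrt(sum(x * x for x in v))


print(magnitude([3, 4, 12]))  # 13.0
```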

Dot Product

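The dot product of two vectors is

$$\mathbf{a}\cdot\mathbf{b} = a_1b_1 + a_2b_2 + a_3b_3$$

and

$$\mathbf{a}\cdot\mathbf{b} = |\mathbf{a}||\mathbf{b}|\cos\theta$$

where $\theta$ is the angle between $\mathbf{a}$ and $\mathbf{b}$.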

Look at derivation of the formula here
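A short Python sketch, again assuming vectors are plain lists, that uses both forms: the component sum gives $\mathbf{a}\cdot\mathbf{b}$, and rearranging $\mathbf{a}\cdot\mathbf{b} = |\mathbf{a}||\mathbf{b}|\cos\theta$ recovers the angle between the vectors (the helper names are illustrative):

```python
import math


def dot(a, b):
    """Component form: multiply matching entries and add the products."""
    return sum(x * y for x, y in zip(a, b))


def angle_between(a, b):
    """Geometric form rearranged: cos(theta) = (a . b) / (|a| |b|)."""
    cos_theta = dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))
    # Clamp to [-1, 1] so floating-point rounding cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, cos_theta)))


a, b = [1, 0, 0], [1, 1, 0]
print(dot(a, b))                          # 1
print(math.degrees(angle_between(a, b)))  # approximately 45
```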

Cross Product

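The cross product of two vectors produces another vector that is perpendicular to both of them:

$$\mathbf{a}\times\mathbf{b} = |\mathbf{a}||\mathbf{b}|\sin\theta\,\hat{\mathbf{n}}$$

where $\theta$ is the angle between $\mathbf{a}$ and $\mathbf{b}$, and $\hat{\mathbf{n}}$ is the unit vector perpendicular to both, with its direction given by the right-hand rule.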

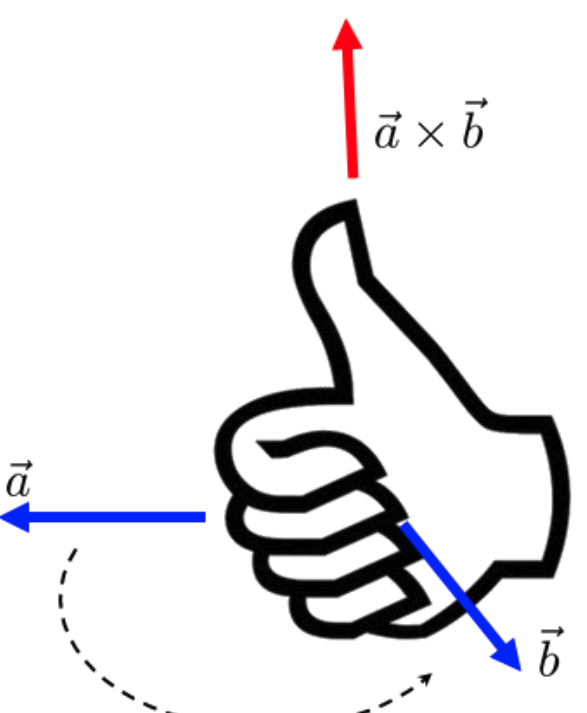

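Your fingers curl from vector $\mathbf{a}$ to $\mathbf{b}$, while your thumb points in the direction of $\mathbf{a}\times\mathbf{b}$.

Thus, reversing the order reverses the direction:

$$\mathbf{b}\times\mathbf{a} = -\,\mathbf{a}\times\mathbf{b}$$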

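The determinant trick for finding the cross product: write $\mathbf{a}$ and $\mathbf{b}$ side by side as two columns,

$$\begin{matrix} a_1 & b_1 \\ a_2 & b_2 \\ a_3 & b_3 \end{matrix}$$

- Cover up the first row.

- Multiply the remaining entries diagonally and subtract: $a_2b_3 - a_3b_2$. (First element)

- Cover up the second row.

- Multiply diagonally and subtract, then multiply by $-1$: $-(a_1b_3 - a_3b_1)$. (Second element)

- Cover up the third row.

- Repeat step 2: $a_1b_2 - a_2b_1$. (Third element)

Thus

$$\mathbf{a}\times\mathbf{b} = \begin{pmatrix} a_2b_3 - a_3b_2 \\ a_3b_1 - a_1b_3 \\ a_1b_2 - a_2b_1 \end{pmatrix}$$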
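The cover-up steps translate directly into code. A minimal Python sketch, assuming $\mathbf{a}$ and $\mathbf{b}$ are 3-element lists written side by side as two columns; it also checks that the result is perpendicular to both inputs and that reversing the order flips the sign:

```python
def cross(a, b):
    """Cross product via the 'cover up a row' trick on the columns a | b."""
    a1, a2, a3 = a
    b1, b2, b3 = b
    first = a2 * b3 - a3 * b2        # cover row 1: cross-multiply and subtract
    second = -(a1 * b3 - a3 * b1)    # cover row 2: same, then multiply by -1
    third = a1 * b2 - a2 * b1        # cover row 3: same as the first element
    return [first, second, third]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


a, b = [1, 2, 3], [4, 5, 6]
c = cross(a, b)
print(c)                     # [-3, 6, -3]
print(dot(c, a), dot(c, b))  # 0 0  -> perpendicular to both a and b
print(cross(b, a))           # [3, -6, 3]  -> swapping the order flips the sign
```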

Area bounded by Vectors

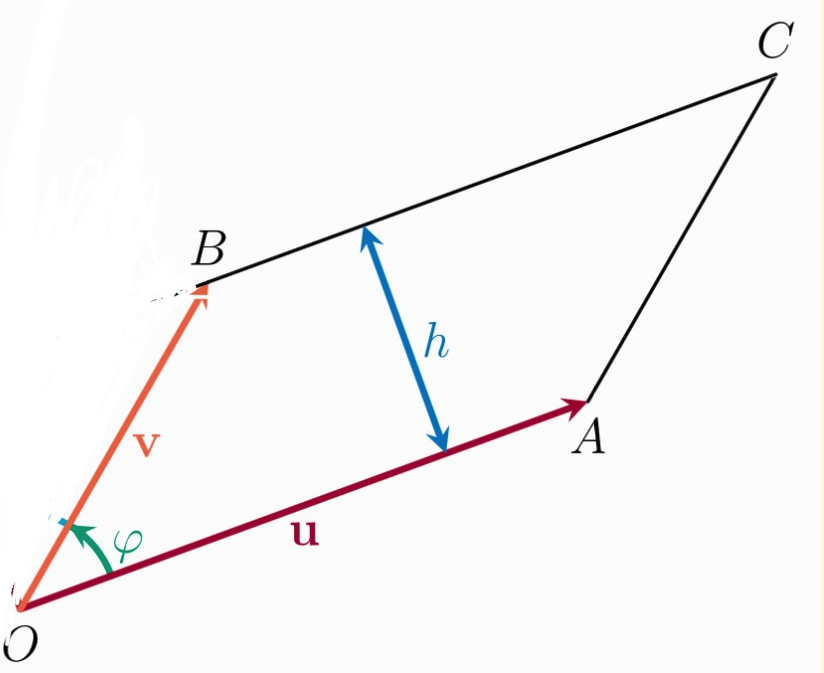

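The area of the parallelogram can be deduced. Let $\mathbf{a}$ and $\mathbf{b}$ be its two sides, with angle $\theta$ between them. Taking $|\mathbf{a}|$ as the base, the height is $|\mathbf{b}|\sin\theta$, so

$$\text{Area} = |\mathbf{a}||\mathbf{b}|\sin\theta$$

Thus the area of the parallelogram is the magnitude of the cross product of $\mathbf{a}$ and $\mathbf{b}$:

$$\text{Area} = |\mathbf{a}\times\mathbf{b}|$$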
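A quick numerical check of Area $= |\mathbf{a}\times\mathbf{b}|$ in Python, with vectors as 3-element lists; the cross product is written in its compact component form and the function names are illustrative:

```python
import math


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def parallelogram_area(a, b):
    """Area of the parallelogram with sides a and b: |a x b|."""
    return math.sqrt(sum(x * x for x in cross(a, b)))


# Base |a| = 3 along the x-axis, b rises 2 units above it, so base * height = 6.
print(parallelogram_area([3, 0, 0], [1, 2, 0]))  # 6.0
```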

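Sample qn: Prove that the volume of a cuboid (more generally, a parallelepiped) with edge vectors $\mathbf{a}$, $\mathbf{b}$, $\mathbf{c}$ is $|\mathbf{a}\cdot(\mathbf{b}\times\mathbf{c})|$. (This result is widely used with determinants and cross products.)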
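This is not a proof, but a numerical sanity check can make the claim concrete. The sketch below assumes the intended formula is the scalar triple product $|\mathbf{a}\cdot(\mathbf{b}\times\mathbf{c})|$ for a box with edge vectors $\mathbf{a}$, $\mathbf{b}$, $\mathbf{c}$, and compares it with length × width × height on illustrative values:

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def box_volume(a, b, c):
    """Volume of the box with edges a, b, c: |a . (b x c)| (scalar triple product)."""
    return abs(dot(a, cross(b, c)))


# Cuboid with edges 2, 3, 4 along the axes: length * width * height = 24.
print(box_volume([2, 0, 0], [0, 3, 0], [0, 0, 4]))  # 24
# Slanted box with the same base area (6) and height (4): still 24.
print(box_volume([2, 0, 0], [1, 3, 0], [1, 1, 4]))  # 24
```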

Points, Lines, Planes

Point

A point is simply a location in 3D space, described by its position vector $\mathbf{p} = (x, y, z)$.

Lines

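Starting from what we know best: in 2D, a line is $y = mx + c$, a starting value $c$ plus steps of slope $m$.

A line in a vector space can be described as

$$\mathbf{r} = \mathbf{a} + \lambda\mathbf{d}$$

where $\mathbf{a}$ is the position vector of a point on the line, $\mathbf{d}$ is its direction vector and $\lambda$ is a scalar parameter.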
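A small Python sketch of $\mathbf{r} = \mathbf{a} + \lambda\mathbf{d}$ that generates points on a line for a few values of $\lambda$; the particular $\mathbf{a}$ and $\mathbf{d}$ are made-up illustrative values:

```python
def point_on_line(a, d, lam):
    """Return r = a + lam * d, the point reached after lam steps of d from a."""
    return [ai + lam * di for ai, di in zip(a, d)]


a = [1, 2, 3]    # a point on the line (illustrative)
d = [2, 0, -1]   # direction vector (illustrative)
for lam in (0, 1, 2):
    print(lam, point_on_line(a, d, lam))
# 0 [1, 2, 3]
# 1 [3, 2, 2]
# 2 [5, 2, 1]
```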

Distance between Point and Line

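The distance between a point $P$ with position vector $\mathbf{p}$ and the line $\mathbf{r} = \mathbf{a} + \lambda\mathbf{d}$ is

$$D = \frac{|(\mathbf{p} - \mathbf{a})\times\mathbf{d}|}{|\mathbf{d}|}$$

This follows from the area result: the parallelogram spanned by $\mathbf{p} - \mathbf{a}$ and $\mathbf{d}$ has area $|(\mathbf{p} - \mathbf{a})\times\mathbf{d}|$, its base is $|\mathbf{d}|$, and its height is the distance $D$.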
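A Python sketch of $D = |(\mathbf{p} - \mathbf{a})\times\mathbf{d}|/|\mathbf{d}|$, assuming the line is given as $\mathbf{r} = \mathbf{a} + \lambda\mathbf{d}$ and vectors are 3-element lists. It treats the distance as the height of the parallelogram spanned by $\mathbf{p} - \mathbf{a}$ and $\mathbf{d}$:

```python
import math


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def point_line_distance(p, a, d):
    """Distance from point p to the line r = a + lambda * d: |(p - a) x d| / |d|."""
    ap = [pi - ai for pi, ai in zip(p, a)]
    # Parallelogram area divided by its base length gives its height.
    return norm(cross(ap, d)) / norm(d)


# Distance from (3, 4, 0) to the z-axis (a = origin, d = (0, 0, 1)).
print(point_line_distance([3, 4, 0], [0, 0, 0], [0, 0, 1]))  # 5.0
```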

Planes

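Starting from what we know. The equation of a plane in Cartesian form is

$$ax + by + cz = d$$

Using the properties of the dot product, it can be rewritten as

$$\mathbf{r}\cdot\mathbf{n} = d$$

or

$$(\mathbf{r} - \mathbf{r}_0)\cdot\mathbf{n} = 0$$

where $\mathbf{r} = (x, y, z)$ is any point on the plane, $\mathbf{n} = (a, b, c)$ is the normal vector to the plane, and $\mathbf{r}_0$ is a known point on the plane, so that $d = \mathbf{r}_0\cdot\mathbf{n}$.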
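A minimal Python sketch that converts a known point $\mathbf{r}_0$ and normal $\mathbf{n}$ into the form $\mathbf{r}\cdot\mathbf{n} = d$ (with $d = \mathbf{r}_0\cdot\mathbf{n}$) and tests whether other points lie on the plane. The point, the normal and the function names are illustrative assumptions:

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def plane_through(r0, n):
    """Return (n, d) for the plane r . n = d through the point r0 with normal n."""
    return n, dot(r0, n)


def on_plane(r, n, d, tol=1e-9):
    """True if r satisfies r . n = d (up to floating-point tolerance)."""
    return abs(dot(r, n) - d) < tol


n, d = plane_through([1, 0, 2], [2, -1, 3])  # illustrative point and normal
print(d)                          # 8, i.e. the Cartesian form 2x - y + 3z = 8
print(on_plane([4, 0, 0], n, d))  # True
print(on_plane([0, 0, 0], n, d))  # False
```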

Line of Intersection between two planes

Given two planes $\Pi_1: \mathbf{r}\cdot\mathbf{n}_1 = d_1$ and $\Pi_2: \mathbf{r}\cdot\mathbf{n}_2 = d_2$,

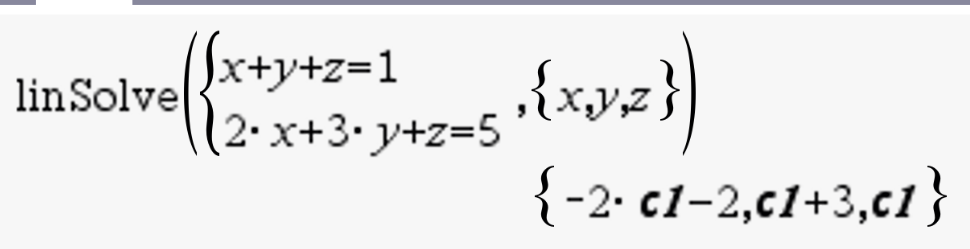

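The line of intersection can be found by solving the two plane equations simultaneously, e.g. by keying them into a GDC (menu - 3 - 2).

Thus, since the line lies in both planes, its direction is perpendicular to both normal vectors $\mathbf{n}_1$ and $\mathbf{n}_2$:

$$\mathbf{r} = \mathbf{r}_0 + \lambda(\mathbf{n}_1\times\mathbf{n}_2)$$

where $\mathbf{r}_0$ is any point that satisfies both plane equations.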
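If a GDC is not at hand, the same line can be computed directly. The Python sketch below is one possible approach, not the GDC's method: it takes the direction as $\mathbf{n}_1\times\mathbf{n}_2$, finds one point by setting a coordinate to 0 and solving the remaining $2\times 2$ system with Cramer's rule (a swapped-in technique), and assumes the planes are written as $\mathbf{r}\cdot\mathbf{n}_1 = d_1$ and $\mathbf{r}\cdot\mathbf{n}_2 = d_2$. The example planes are illustrative:

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def intersection_line(n1, d1, n2, d2):
    """Return (point, direction) for the line where r.n1 = d1 meets r.n2 = d2."""
    direction = cross(n1, n2)
    if all(c == 0 for c in direction):
        raise ValueError("planes are parallel: no single line of intersection")
    # Set the coordinate with the largest |direction| component to 0 ...
    k = max(range(3), key=lambda idx: abs(direction[idx]))
    i, j = [idx for idx in range(3) if idx != k]
    # ... and solve the remaining 2x2 system for the other two by Cramer's rule.
    # This det equals +/- direction[k], so it is nonzero here.
    det = n1[i] * n2[j] - n1[j] * n2[i]
    point = [0.0, 0.0, 0.0]
    point[i] = (d1 * n2[j] - n1[j] * d2) / det
    point[j] = (n1[i] * d2 - d1 * n2[i]) / det
    return point, direction


# Illustrative planes: x + y + z = 6 and x - y = 0.
point, direction = intersection_line([1, 1, 1], 6, [1, -1, 0], 0)
print(point, direction)  # [3.0, 3.0, 0.0] [1, 1, -2]
```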

Intersection of Planes

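Given three planes ($\Pi_1$, $\Pi_2$, $\Pi_3$), their intersection is the solution of the $3\times 3$ system formed by their equations. If the determinant of the coefficient matrix (the normal vectors $\mathbf{n}_1$, $\mathbf{n}_2$, $\mathbf{n}_3$ as rows) is nonzero, the planes meet at exactly one point.

However, if the planes have a determinant of 0, there are either no solutions or infinitely many solutions.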

Distinguishing between no solutions & Infinite solutions

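! If the determinant of the three normal vectors is 0:

- Find $L$: the line of intersection between the first two planes, $\Pi_1$ and $\Pi_2$.

- Check whether a point $\mathbf{r}_0$ on $L$ satisfies the third plane $\Pi_3: \mathbf{r}\cdot\mathbf{n}_3 = d_3$, i.e. whether $\mathbf{r}_0\cdot\mathbf{n}_3 = d_3$. If yes, then $L$ lies on $\Pi_3$, suggesting that there are infinite solutions. If not, there are no solutions. (This assumes $\Pi_1$ and $\Pi_2$ are not parallel; when the determinant is 0, $L$ is then automatically parallel to $\Pi_3$, so one point is enough to decide.)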
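The two-step check can be sketched in Python. This is an illustration under stated assumptions: each plane is given as a pair `(n, d)` meaning $\mathbf{r}\cdot\mathbf{n} = d$, the first two planes are assumed not to be parallel, a point on $L$ is found with Cramer's rule (swapped in for the GDC step), and the tolerance `tol` is an arbitrary choice. The example planes are made up:

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def intersection_line(n1, d1, n2, d2):
    """(point, direction) of the line where r.n1 = d1 meets r.n2 = d2."""
    direction = cross(n1, n2)
    if all(c == 0 for c in direction):
        raise ValueError("planes 1 and 2 are parallel; this sketch does not handle that case")
    k = max(range(3), key=lambda idx: abs(direction[idx]))
    i, j = [idx for idx in range(3) if idx != k]
    det = n1[i] * n2[j] - n1[j] * n2[i]
    point = [0.0, 0.0, 0.0]
    point[i] = (d1 * n2[j] - n1[j] * d2) / det
    point[j] = (n1[i] * d2 - d1 * n2[i]) / det
    return point, direction


def classify_three_planes(p1, p2, p3, tol=1e-9):
    """Each plane is (n, d) meaning r . n = d."""
    (n1, d1), (n2, d2), (n3, d3) = p1, p2, p3
    # Nonzero determinant of the normals -> exactly one intersection point.
    if abs(dot(n1, cross(n2, n3))) > tol:
        return "unique point"
    # Step 1: find L, the line of intersection between plane 1 and plane 2.
    point, direction = intersection_line(n1, d1, n2, d2)
    # Step 2: L lies on plane 3 if a point of L satisfies it and
    # L's direction is perpendicular to n3.
    if abs(dot(point, n3) - d3) < tol and abs(dot(direction, n3)) < tol:
        return "infinite solutions"
    return "no solutions"


p1 = ([1, 1, 1], 6)   # x + y + z = 6  (illustrative)
p2 = ([1, -1, 0], 0)  # x - y = 0
print(classify_three_planes(p1, p2, ([0, 0, 1], 1)))  # unique point
print(classify_three_planes(p1, p2, ([2, 0, 1], 6)))  # infinite solutions
print(classify_three_planes(p1, p2, ([2, 0, 1], 7)))  # no solutions
```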

Distance between two parallel planes

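$$D = \frac{|d_1 - d_2|}{|\mathbf{n}|}$$

where the two planes are written with the same normal vector, $\mathbf{r}\cdot\mathbf{n} = d_1$ and $\mathbf{r}\cdot\mathbf{n} = d_2$.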
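A one-function Python sketch of $D = |d_1 - d_2|/|\mathbf{n}|$, assuming both planes are written as $\mathbf{r}\cdot\mathbf{n} = d$ with the same normal $\mathbf{n}$; if one equation is a multiple of the other, rescale it first. The example planes are illustrative:

```python
import math


def parallel_plane_distance(n, d1, d2):
    """Distance between r . n = d1 and r . n = d2 (both written with the SAME n)."""
    return abs(d1 - d2) / math.sqrt(sum(x * x for x in n))


# Illustrative planes 2x - y + 2z = 3 and 2x - y + 2z = 12, with |n| = 3.
print(parallel_plane_distance([2, -1, 2], 3, 12))  # 3.0
```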

Linearity

Formal definition of Linearity #Linearity

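- Additivity: $f(\mathbf{u} + \mathbf{v}) = f(\mathbf{u}) + f(\mathbf{v})$

- Scaling: $f(c\mathbf{u}) = c\,f(\mathbf{u})$

where $\mathbf{u}$ and $\mathbf{v}$ are any vectors and $c$ is any scalar.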
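Both conditions can be spot-checked numerically. The Python sketch below (the function `is_linear`, the two example maps and the sample vectors are illustrative) tests additivity and scaling on a handful of inputs; passing is only evidence of linearity, while a single failure shows a map is not linear:

```python
def is_linear(f, samples, scalars, tol=1e-9):
    """Spot-check additivity and scaling on sample vectors (evidence, not a proof)."""
    def close(u, v):
        return all(abs(x - y) <= tol for x, y in zip(u, v))

    for u in samples:
        for v in samples:
            # Additivity: f(u + v) == f(u) + f(v)
            if not close(f([a + b for a, b in zip(u, v)]),
                         [a + b for a, b in zip(f(u), f(v))]):
                return False
        for c in scalars:
            # Scaling: f(c u) == c f(u)
            if not close(f([c * a for a in u]), [c * a for a in f(u)]):
                return False
    return True


def rotate90(v):  # rotation by 90 degrees: linear
    return [-v[1], v[0]]


def shift(v):     # translation by (1, 0): not linear
    return [v[0] + 1, v[1]]


samples = [[1, 2], [3, -1], [0.5, 4]]
print(is_linear(rotate90, samples, [2, -3]))  # True
print(is_linear(shift, samples, [2, -3]))     # False
```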

Matrices

Recall how a function in algebra transforms $x$ to $y$:

$$y = f(x)$$

In linear algebra, instead of transforming scalar values $x$ and $y$, we transform a vector into another vector.

However, to make the distinction clearer, mathematicians use $A$ instead of $f$.

We need special tools to work with vectors, and this is where matrices come in. A matrix encodes a linear transformation of a vector, similar to how a function $f$ encodes a transformation of $x$ to $y$.

e.g.

$$\mathbf{y} = A\mathbf{x}$$

where $A$ is a matrix and $\mathbf{x}$, $\mathbf{y}$ are vectors.

Note that the dimension of $\mathbf{x}$ must equal the number of columns of $A$.

Matrix Multiplication (How does it transform $\mathbf{x}$ into $\mathbf{y}$?)

Matrix multiplication, step by step:

- Take the $n$th row of numbers in $A$.

- Pivot it clockwise by 90 degrees so that it lines up with the $m$th column of $\mathbf{x}$ (a single vector has only one column, $m = 1$).

- Multiply each entry by the entry of $\mathbf{x}$ next to it.

- Add up all the products.

- The sum is the entry in the $n$th row and $m$th column of $\mathbf{y}$.

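Thus, for a $2\times 2$ matrix,

$$\mathbf{y} = \begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix}\begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} a_{11}x_1 + a_{12}x_2 \\ a_{21}x_1 + a_{22}x_2 \end{pmatrix}$$

Bravo!! We have transformed $\mathbf{x}$ into $\mathbf{y}$.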
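The row-by-column recipe in Python, as a minimal sketch assuming matrices are lists of rows and a vector is a one-column matrix. The same loop handles a right-hand factor with several columns, which is the general case $m > 1$:

```python
def matmul(A, B):
    """Entry (n, m) of AB: row n of A, laid alongside column m of B, multiplied and summed."""
    if len(A[0]) != len(B):
        raise ValueError("number of columns of A must equal number of rows of B")
    return [[sum(A[n][k] * B[k][m] for k in range(len(B)))
             for m in range(len(B[0]))]
            for n in range(len(A))]


A = [[1, 2],
     [3, 4]]
x = [[5],
     [6]]            # a column vector stored as a 2x1 matrix
print(matmul(A, x))  # [[17], [39]]
```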

System of Linear Equations

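A matrix also encodes a system of linear equations. Suppose there are 2 equations as shown, and we want to solve for $a$ and $b$:

$$p_1 a + q_1 b = r_1$$

$$p_2 a + q_2 b = r_2$$

A matrix representation of the above is

$$\begin{pmatrix} p_1 & q_1 \\ p_2 & q_2 \end{pmatrix}\begin{pmatrix} a \\ b \end{pmatrix} = \begin{pmatrix} r_1 \\ r_2 \end{pmatrix}$$

Look, aren't they equivalent??

Solving for the unknowns is as easy as

$$\begin{pmatrix} a \\ b \end{pmatrix} = \begin{pmatrix} p_1 & q_1 \\ p_2 & q_2 \end{pmatrix}^{-1}\begin{pmatrix} r_1 \\ r_2 \end{pmatrix}$$

where the inverse of the matrix is essentially the reverse of its transformation, similar to how $f^{-1}$ undoes $f$: $f^{-1}(f(x)) = x$.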
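A minimal Python sketch of solving by the inverse in the $2\times 2$ case, using the standard formula for a $2\times 2$ inverse (swap the diagonal entries, negate the off-diagonal ones, divide by the determinant). The system $2a + 3b = 8$, $a - b = -1$ is a made-up example:

```python
def inverse_2x2(M):
    """Inverse of [[p, q], [r, s]]: swap p and s, negate q and r, divide by det."""
    (p, q), (r, s) = M
    det = p * s - q * r
    if det == 0:
        raise ValueError("matrix is not invertible (det = 0)")
    return [[s / det, -q / det],
            [-r / det, p / det]]


def solve_2x2(M, rhs):
    """Return the unknowns M^-1 @ rhs."""
    inv = inverse_2x2(M)
    return [inv[0][0] * rhs[0] + inv[0][1] * rhs[1],
            inv[1][0] * rhs[0] + inv[1][1] * rhs[1]]


# Illustrative system: 2a + 3b = 8 and a - b = -1.
a, b = solve_2x2([[2, 3], [1, -1]], [8, -1])
print(a, b)  # approximately 1 and 2 (up to floating-point rounding)
```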

Transpose

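Transpose means to flip a vector or a matrix about its main diagonal #Transpose

Thus a column vector becomes a row vector:

$$\begin{pmatrix} a_1 \\ a_2 \\ a_3 \end{pmatrix}^T = \begin{pmatrix} a_1 & a_2 & a_3 \end{pmatrix}$$

In the case of matrices, row $i$ becomes column $i$:

$$(A^T)_{ij} = A_{ji}$$

Another property is that

$$(AB)^T = B^T A^T$$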
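A Python sketch, assuming matrices are lists of rows: `zip(*M)` regroups the $i$th entries of every row into a new row, which is exactly the flip about the diagonal. The last line checks $(AB)^T = B^TA^T$ on one illustrative example:

```python
def transpose(M):
    """Flip M about its main diagonal: entry (i, j) moves to (j, i)."""
    return [list(row) for row in zip(*M)]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


A = [[1, 2, 3],
     [4, 5, 6]]
print(transpose(A))                # [[1, 4], [2, 5], [3, 6]]
print(transpose([[7], [8], [9]]))  # [[7, 8, 9]]: a column vector becomes a row

B = [[1, 0],
     [2, 1],
     [0, 3]]
print(transpose(matmul(A, B)) == matmul(transpose(B), transpose(A)))  # True
```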

Determinant

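The determinant measures how a transformation scales space: how it changes areas (in 2D) and volumes (in 3D).

A matrix $A$ maps the basis vectors $\hat{\mathbf{i}}$, $\hat{\mathbf{j}}$, $\hat{\mathbf{k}}$ to its three columns $\mathbf{a}_1$, $\mathbf{a}_2$, $\mathbf{a}_3$.

The volume spanned by the three basis vectors is given as

$$V_{\text{initial}} = \hat{\mathbf{i}}\cdot(\hat{\mathbf{j}}\times\hat{\mathbf{k}}) = 1$$

The determinant measures the ratio between the final volume, after the basis vectors are transformed by $A$, and their initial volume:

$$\det A = \frac{V_{\text{final}}}{V_{\text{initial}}} = \mathbf{a}_1\cdot(\mathbf{a}_2\times\mathbf{a}_3)$$

where $V_{\text{final}}$ is the signed volume of the parallelepiped spanned by $\mathbf{a}_1$, $\mathbf{a}_2$, $\mathbf{a}_3$; a negative sign means the orientation of space has been flipped.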

Fun fact

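The determinant tells us about the invertibility of the matrix: $A$ is invertible if and only if $\det A \neq 0$. A zero determinant means space has been squashed into a lower dimension, and that cannot be undone.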
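A minimal Python sketch of $\det A = \mathbf{a}_1\cdot(\mathbf{a}_2\times\mathbf{a}_3)$, the signed volume of the box spanned by the columns of $A$ (the unit cube has volume 1, so this is also the volume ratio). The three example matrices are illustrative; the last one squashes space flat and so is not invertible:

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def det3(M):
    """Signed volume of the box spanned by the columns of a 3x3 matrix M."""
    c1, c2, c3 = ([M[r][c] for r in range(3)] for c in range(3))
    return dot(c1, cross(c2, c3))


stretch = [[2, 0, 0], [0, 3, 0], [0, 0, 4]]  # unit cube -> 2 x 3 x 4 box
shear = [[1, 1, 0], [0, 1, 0], [0, 0, 1]]    # slants the cube, keeps volume
flat = [[1, 2, 3], [2, 4, 6], [0, 1, 1]]     # row 2 = 2 * row 1: squashes space
for name, M in [("stretch", stretch), ("shear", shear), ("flat", flat)]:
    d = det3(M)
    print(name, d, "invertible" if d != 0 else "not invertible")
# stretch 24 invertible
# shear 1 invertible
# flat 0 not invertible
```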

Eigenvector and Eigenvalue

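An eigenvector of a matrix $A$ is a nonzero vector $\mathbf{v}$ that, when the matrix is applied to it, is only scaled by a factor $\lambda$ (the eigenvalue):

$$A\mathbf{v} = \lambda\mathbf{v}$$

Rearranging gives a linear system

$$(A - \lambda I)\mathbf{v} = \mathbf{0}$$

Thus, to find a nonzero solution of $(A - \lambda I)\mathbf{v} = \mathbf{0}$, the matrix $A - \lambda I$ must not be invertible, i.e.

$$\det(A - \lambda I) = 0$$

Solving for $\lambda$ gives the eigenvalues; substituting each one back into $(A - \lambda I)\mathbf{v} = \mathbf{0}$ gives the corresponding eigenvectors.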
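For a $2\times 2$ matrix $\begin{pmatrix} a & b \\ c & d \end{pmatrix}$, $\det(A - \lambda I) = 0$ expands to the quadratic $\lambda^2 - (a + d)\lambda + (ad - bc) = 0$. A minimal Python sketch that solves it, assuming real eigenvalues, and then picks an eigenvector with the shortcut $\mathbf{v} = (b, \lambda - a)$, which is valid when $b \neq 0$ (both helper names are illustrative):

```python
import math


def eigenvalues_2x2(A):
    """Roots of det(A - lam I) = lam^2 - tr(A) lam + det(A) = 0 (real case only)."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = tr * tr - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues; this sketch only handles real ones")
    root = math.sqrt(disc)
    return (tr + root) / 2, (tr - root) / 2


def eigenvector_2x2(A, lam):
    """A nonzero v with (A - lam I) v = 0, using v = (b, lam - a); assumes b != 0."""
    (a, b), _ = A
    if b == 0:
        raise ValueError("this shortcut needs a nonzero top-right entry b")
    return [b, lam - a]


A = [[2, 1], [1, 2]]
for lam in eigenvalues_2x2(A):
    v = eigenvector_2x2(A, lam)
    Av = [A[0][0] * v[0] + A[0][1] * v[1],
          A[1][0] * v[0] + A[1][1] * v[1]]
    print(lam, v, Av)  # Av equals lam * v
# 3.0 [1, 1.0] [3.0, 3.0]
# 1.0 [1, -1.0] [1.0, -1.0]
```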

Linear Algebra (Modern)

Infinite Vectors and Function Spaces

"The only thing limiting us in Math is our imagination" ~ Joshua

Functions are vectors. What? Yes, it's true. Every function can be viewed as an infinite-dimensional vector, and these infinite vectors live in something called a function space.

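Introduction to Infinite-Dimensional Vectors: Functions

Take a function $f(x)$. Sampling it at $n$ points $x_1, \dots, x_n$ gives an ordinary $n$-dimensional vector $\big(f(x_1), f(x_2), \dots, f(x_n)\big)$. As the points get closer together and $n \to \infty$, this vector captures the whole function, so $f$ can be thought of as a vector with infinitely many components.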

Orthogonal Basis

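Suppose we want to build functions out of simpler basis functions, the way vectors are built out of basis vectors.

Recall that in Euclidean space, a linear combination of two orthogonal (perpendicular) vectors, such as $\hat{\mathbf{i}}$ and $\hat{\mathbf{j}}$, can describe any vector in the plane.

Thus we need to find two functions whose dot product is 0.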

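When dealing with finite vectors, the dot product is defined as follows, where each pair of matching elements is multiplied and the products are summed:

$$\mathbf{a}\cdot\mathbf{b} = \sum_{i=1}^{n} a_i b_i$$

Similarly, in the context of infinite vectors, it is defined as

$$\langle f, g\rangle = \int_a^b f(x)\,g(x)\,dx$$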

Fourier discovered that the two functions $\sin x$ and $\cos x$ are orthogonal over one period.

Thus,

$$\int_{-\pi}^{\pi} \sin x \cos x\,dx = 0$$
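Sampling makes this concrete. A Python sketch, assuming a midpoint Riemann sum with `n` sample points (an arbitrary choice) as the approximation to the integral $\int_a^b f(x)\,g(x)\,dx$: the sum is literally the finite dot product of the two sampled vectors, scaled by the step width. It shows $\sin x$ and $\cos x$ are orthogonal on $[-\pi, \pi]$, while $\sin x$ with itself gives $\pi$, not 0:

```python
import math


def inner_product(f, g, a, b, n=10_000):
    """Approximate <f, g> = integral of f(x) g(x) from a to b (midpoint rule).

    f and g are sampled at n midpoints, giving two ordinary n-dimensional
    vectors; their dot product times the step width h approximates the integral.
    """
    h = (b - a) / n
    xs = [a + (k + 0.5) * h for k in range(n)]
    return h * sum(f(x) * g(x) for x in xs)


print(inner_product(math.sin, math.cos, -math.pi, math.pi))  # approximately 0
print(inner_product(math.sin, math.sin, -math.pi, math.pi))  # approximately pi
```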